Google Gemini 2.5 Flash Review: Can You Have Both Speed and Deep Thinking? The Ultimate Experience on XXAI

In the field of AI, there has long been an "impossible triangle": fast response speed, strong reasoning capability, and low cost. Usually, you can only pick two. However, Google’s newly released Gemini 2.5 Flash seems determined to break this law.

As the newest "versatile all-rounder" in the Gemini series, Gemini 2.5 Flash is no longer just a lightweight model that sacrifices IQ for speed. It introduces a revolutionary "Controllable Thinking" mechanism and achieves a significant generational leap in long-text understanding, code generation, and multimodal processing.

Today, we will move beyond simple parameter comparisons and take a close look at how this model performs on XXAI in practical use, to see whether it truly deserves the title of "next-gen all-round assistant."

(Image Caption: Gemini 2.5 Flash aims to fuse ultra-fast response with deep reasoning capabilities, adapting to diverse task requirements. Source: Google DeepMind)

I. Core Evolution: What Makes Gemini 2.5 Flash Strong?

Compared to earlier Flash models (1.5 Flash and 2.0 Flash), the upgrade in Gemini 2.5 Flash is comprehensive. It is no longer a simple "speedster," but an "intelligent strategist" capable of adjusting its brainpower according to task difficulty. Here are its four core advantages:

1. Revolutionary "Thinking Budget"

This is Gemini 2.5 Flash's most exciting feature. Traditional lightweight models tend to be "straightforward," often hallucinating when faced with complex logic. 2.5 Flash, however, supports thinking budget adjustments:

- Low Thinking Mode: For simple translation and summarization tasks, it maintains its signature millisecond-level response.

- Deep Thinking Mode: When facing complex math problems, code refactoring, or logical reasoning, it can consume more tokens to perform step-by-step Chain of Thought (CoT) reasoning, with performance approaching that of some flagship Pro models.

- What this means: You get a scalable "intelligent brain" for the cost of a lightweight model.
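As a rough illustration of the idea, the sketch below picks a thinking budget per task type and places it in a request body. The field path `generationConfig.thinkingConfig.thinkingBudget` follows the Gemini API's REST request shape as we understand it. The task names, the budget values, and the 24,576-token cap are assumptions for illustration, and XXAI may not expose this setting directly.

```python
import json

# Assumed upper limit for Gemini 2.5 Flash; verify against the current API docs.
MAX_THINKING_BUDGET = 24576

# Hypothetical mapping from task type to thinking budget (in tokens).
# 0 means "answer directly"; larger values allow more step-by-step reasoning.
TASK_BUDGETS = {
    "translate": 0,
    "summarize": 0,
    "refactor": 8192,
    "math": 16384,
}

DEFAULT_BUDGET = 1024  # assumed fallback for task types not listed above


def build_request(prompt: str, task: str) -> dict:
    """Build a generateContent-style JSON body with a per-task thinking budget."""
    budget = min(TASK_BUDGETS.get(task, DEFAULT_BUDGET), MAX_THINKING_BUDGET)
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingBudget": budget}},
    }


if __name__ == "__main__":
    body = build_request("Translate 'hello' into French.", "translate")
    print(json.dumps(body, indent=2))
```

The point of the design is that the budget is chosen by the caller per request, so one model can serve both quick and reasoning-heavy tasks.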

2. Million-Token Context Window (1M Tokens)

Gemini 2.5 Flash continues Google's dominance in the long-context domain. A 1 million token context window means it can digest any of the following in one go:

- Video files up to 1 hour long;

- Codebases exceeding 30,000 lines;

- Technical PDF documents up to 700 pages long.

It doesn't just read them; it accurately extracts key information, offering unparalleled advantages in legal contract analysis or academic paper reviews.
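Before sending a large batch of material, it can help to estimate whether it fits in the window. The sketch below is a back-of-the-envelope check only: the 4-characters-per-token ratio is a common rule of thumb for English text, not the model's real tokenizer, and the reserve for output tokens is an assumed value.

```python
from typing import Iterable

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size quoted in this article
CHARS_PER_TOKEN = 4                # rough assumption for English text
OUTPUT_RESERVE_TOKENS = 8_192      # assumed headroom left for the model's answer


def estimate_tokens(text: str) -> int:
    """Estimate the token count using ceiling division by CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: Iterable[str],
                    reserve: int = OUTPUT_RESERVE_TOKENS) -> bool:
    """Return True if the combined estimate plus the reserve fits the window."""
    total = sum(estimate_tokens(t) for t in texts)
    return total + reserve <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    documents = ["lorem ipsum " * 50_000, "def f():\n    pass\n" * 30_000]
    print(fits_in_context(documents))
```

For an exact figure, use the provider's own token counting facility rather than a character heuristic.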

3. True Native Multimodal Interaction

Unlike other models that rely on external visual encoders, Gemini 2.5 Flash is natively multimodal. It can fluently understand video, audio, images, and text.

- Real-world Test: Upload a video of yourself assembling furniture and ask, "Where did I go wrong?" It can precisely pinpoint the frame in the video and provide guidance. This ability to "watch and understand" video greatly expands its application boundaries.

4. Powerful Output: Excellence in Both Image and Text

While it is an all-purpose model, its image generation capabilities (Flash Image) are not to be underestimated. It supports multi-image fusion, possesses superior text rendering capabilities (spelling words correctly within generated images), and maintains excellent character consistency. If you ask it to write a blog post with accompanying images, it can achieve efficient "text-image integrated" creation.

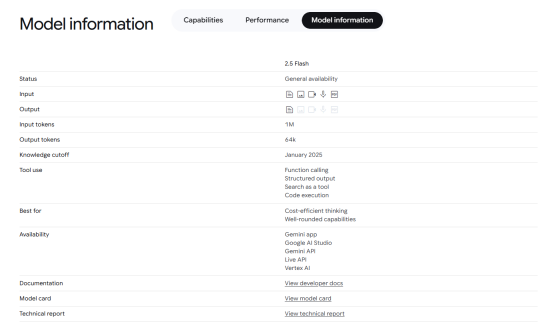

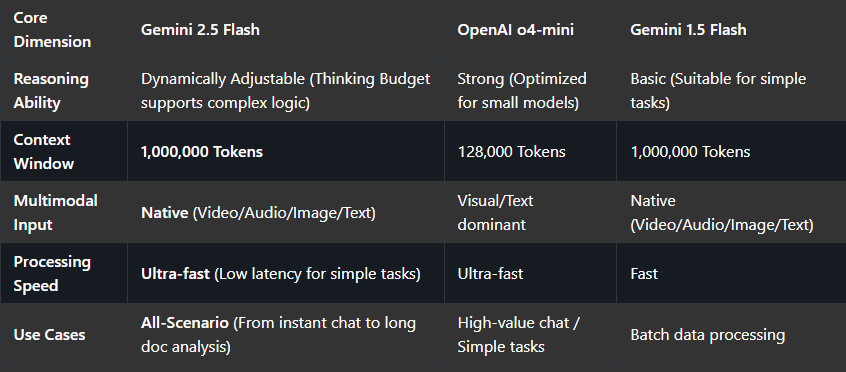

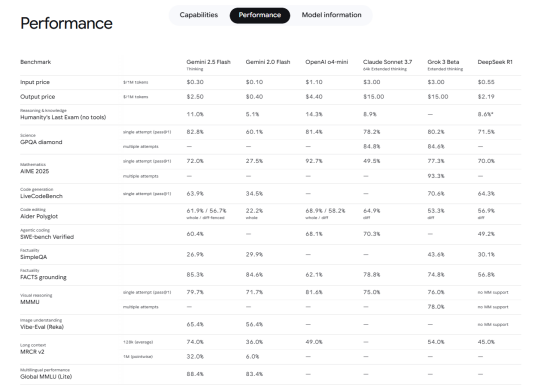

II. Data Comparison: Performance Analysis from an Objective Perspective

To give everyone a clearer understanding of Gemini 2.5 Flash's positioning, we selected top-performing models in the market for an objective parameter comparison.

Note: The following data is based on public benchmarks and actual experience, intended to showcase feature differences rather than a simple ranking of superiority.

Analysis Conclusion:

- OpenAI o4-mini performs exceptionally well and is very stable for daily conversation and general tasks.

- Gemini 2.5 Flash's unique advantage lies in its massive context window and the controllability of its deep reasoning. If you need to analyze an entire book or process complex video content, its 1M-token context window is its core moat.

(Image Caption: The Gemini series demonstrates unique technical architectural advantages when handling long context and multimodal tasks.)

III. Why Use Gemini 2.5 Flash on XXAI?

Although Google provides an official entry point, using Gemini 2.5 Flash on XXAI offers a noticeably better user experience thanks to the platform's ecosystem integration.

1. The Ultimate Low Barrier: Only 1 Point Per Use

On the XXAI platform, calling upon the powerful features of Gemini 2.5 Flash (whether for chatting, coding, or analyzing long documents) costs only 1 point per request.

Compared to the maintenance costs of setting up your own API environment or dealing with complex pay-per-token billing, XXAI's point model is simple and transparent. It serves both high-frequency developers and casual users who want to experiment, keeping trial-and-error costs extremely low.

2. Efficient "Multi-Model Synergy" Workflow

Gemini 2.5 Flash is great, but it's not a magic bullet for everything. XXAI's biggest advantage is that you can switch models with a single click:

- Complex Logic Verification: First, use DeepSeek R1 or o1-preview for deep logical deduction and framework building.

- Long Doc Processing & Execution: Hand the deduced framework over to Gemini 2.5 Flash to utilize its ultra-long context capability for rapidly filling in content, analyzing background materials, or generating supporting code.

- This "Large Model Combo" lets each model do what it is best at, which can noticeably improve your work efficiency.
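The two-step workflow above can be sketched as a small pipeline. Everything here is hypothetical: `planner` and `executor` stand in for whatever reasoning model and long-context model you select, and the prompt wording is only an example. XXAI's switching happens in its interface, so this shows the flow of information rather than any real XXAI API.

```python
from typing import Callable

# A model is represented as a plain function from prompt text to response text.
ModelFn = Callable[[str], str]


def plan_then_execute(task: str, materials: str,
                      planner: ModelFn, executor: ModelFn) -> str:
    """Ask a reasoning model for an outline, then have a long-context model fill it in."""
    outline = planner(f"Produce a step-by-step outline for: {task}")
    prompt = (
        f"Outline:\n{outline}\n\n"
        f"Background materials:\n{materials}\n\n"
        "Fill in the outline using only the materials above."
    )
    return executor(prompt)


if __name__ == "__main__":
    # Stub models so the sketch runs without any network access.
    def stub_planner(prompt: str) -> str:
        return "1. Summary\n2. Key findings"

    def stub_executor(prompt: str) -> str:
        return f"[draft written from a {len(prompt)}-character prompt]"

    print(plan_then_execute("Quarterly report", "raw notes",
                            stub_planner, stub_executor))
```

Passing the models in as functions keeps the pipeline independent of any one provider, so either step can be swapped without touching the other.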

3. Privacy Meets Convenience

XXAI provides a stable, high-speed access channel for all users. At the same time, the platform offers strict privacy protection for user data, giving you peace of mind whether you are processing company documents or personal creative work.

IV. Real-World Application Scenarios

What can 1 point get you with Gemini 2.5 Flash on XXAI?

- Scenario A: The Full-Stack Developer's Debugging Miracle

Paste in a failing codebase thousands of lines long (leveraging the long context window) and enable "Thinking Mode." It not only locates the bug but also explains why the error occurred and provides the fix.

- Scenario B: The Video Vlogger's Efficiency Tool

Upload a 20-minute product launch video and ask Gemini 2.5 Flash to extract "5 Core Selling Points" and generate an engaging, "Little Red Book" (lifestyle influencer) style promotional post.

- Scenario C: The Academic's Research Companion

Upload 10 related PDF papers and ask it to perform a cross-comparison, generating a literature review complete with citation sources.

(Image Caption: Gemini 2.5 Flash understands complex code structures and performs debugging, significantly boosting development efficiency.)

V. Conclusion

The emergence of Gemini 2.5 Flash marks a shift in AI models from simply "warring over parameters" to competing on "efficiency" and "scenarios." It possesses the depth to process million-token documents, the speed of millisecond-level responses, and the flexibility to adjust its intelligence based on the task.

For XXAI users, unlocking such an "all-around powerhouse" for just 1 point is undoubtedly the highest-value choice currently available. Whether you are a professional dealing with complex documents or a developer chasing cutting-edge technology, Gemini 2.5 Flash deserves a spot in your core toolkit.

Log in to XXAI now and experience the efficiency and intelligence of Gemini 2.5 Flash!